Frequency probability

Frequency probability is the interpretation of probability that defines an event's probability as the limit of its relative frequency in a large number of trials. The development of the frequentist account was motivated by the problems and paradoxes of the previously dominant viewpoint, the classical interpretation. The shift from the classical view to the frequentist view represents a paradigm shift in the progression of statistical thought. This school is often associated with the names of Jerzy Neyman and Egon Pearson, who described the logic of statistical hypothesis testing. Other influential figures of the frequentist school include John Venn, R.A. Fisher, and Richard von Mises.

Definition

Frequentists talk about probabilities only when dealing with well-defined random experiments. The set of all possible outcomes of a random experiment is called the sample space of the experiment. An event is defined as a particular subset of the sample space that is of interest. For any event, only one of two possibilities can happen: it occurs or it does not occur. The relative frequency of occurrence of an event, observed over a number of repetitions of the experiment, is a measure of the probability of that event.

Thus, if $n_t$ is the total number of trials and $n_x$ is the number of trials where the event $x$ occurred, the probability $P(x)$ of the event occurring will be approximated by the relative frequency as follows:

$$P(x) \approx \frac{n_x}{n_t}.$$
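The approximation can be illustrated with a short simulation. This is only a sketch under stated assumptions: the fair six-sided die, the event "the roll is even", the function name `relative_frequency`, and the fixed seed are all hypothetical choices made for the example, not part of the frequentist definition itself.

```python
import random

def relative_frequency(event, sample_space, n_trials, seed=0):
    """Estimate P(event) as n_x / n_t over n_trials simulated repetitions."""
    rng = random.Random(seed)          # fixed seed so the run is reproducible
    outcomes = sorted(sample_space)    # list of all possible outcomes
    # n_x counts the trials in which the drawn outcome belongs to the event.
    n_x = sum(1 for _ in range(n_trials) if rng.choice(outcomes) in event)
    return n_x / n_trials

sample_space = {1, 2, 3, 4, 5, 6}   # assumed experiment: one roll of a fair die
even = {2, 4, 6}                    # the event is a subset of the sample space

estimate = relative_frequency(even, sample_space, n_trials=10_000)
print(estimate)  # near 0.5 for this simulated die, though generally not exactly 0.5
```

Note that the simulation builds in equally likely outcomes through `rng.choice`; in a real experiment only the counts $n_x$ and $n_t$ are observed.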

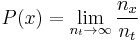

A further and more controversial claim is that in the "long run," as the number of trials approaches infinity, the relative frequency will converge exactly to the probability:[1]

$$P(x) = \lim_{n_t \to \infty} \frac{n_x}{n_t}.$$
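A finite simulation cannot establish this limit, but it can show how the relative frequency behaves as the number of trials grows. The sketch below assumes a simulated experiment whose event occurs with a built-in probability `p_true` on each trial; that parameter, the function name `running_relative_frequencies`, the checkpoints, and the seed are hypothetical and chosen only for illustration.

```python
import random

def running_relative_frequencies(p_true, checkpoints, seed=1):
    """Return {n_t: n_x / n_t} at each checkpoint of a simulated trial sequence."""
    rng = random.Random(seed)   # fixed seed so the run is reproducible
    wanted = set(checkpoints)
    n_x = 0                     # number of trials so far in which the event occurred
    result = {}
    for n_t in range(1, max(checkpoints) + 1):
        if rng.random() < p_true:   # assumed mechanism: event occurs with chance p_true
            n_x += 1
        if n_t in wanted:
            result[n_t] = n_x / n_t
    return result

freqs = running_relative_frequencies(
    p_true=0.5, checkpoints=[10, 100, 1_000, 10_000, 100_000]
)
for n_t, f in freqs.items():
    print(f"{n_t:>7}  {f:.4f}")   # relative frequency after n_t trials
```

In typical runs the later values lie closer to `p_true` than the early ones, but every printed value still comes from a finite sequence.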

One objection to this is that we can only ever observe a finite sequence, and thus the extrapolation to the infinite involves unwarranted metaphysical assumptions. This conflicts with the standard claim that the frequency interpretation is somehow more "objective" than other theories of probability.

Scope

This is a technical, scientific definition and does not claim to capture all connotations of the word 'probable' in colloquial speech. Compare how physicists use the concept of force in a precise manner, even though 'force' is also an everyday word in many natural languages and appears, for example, in religious texts. This seldom causes problems or confusion, as the context usually reveals whether the scientific concept is intended.

As William Feller noted:

- There is no place in our system for speculations concerning the probability that the sun will rise tomorrow. Before speaking of it we should have to agree on an (idealized) model which would presumably run along the lines "out of infinitely many worlds one is selected at random..." Little imagination is required to construct such a model, but it appears both uninteresting and meaningless.

History

The frequentist view was arguably foreshadowed by Aristotle, in Rhetoric,[2] when he wrote:

the probable is that which for the most part happens[3]

It was given explicit statement by Robert Leslie Ellis in "On the Foundations of the Theory of Probabilities",[4] read on 14 February 1842[2] (and much later again in "Remarks on the Fundamental Principles of the Theory of Probabilities"[5]). Antoine Augustin Cournot presented the same conception in 1843, in Exposition de la théorie des chances et des probabilités.[6]

Perhaps the first elaborate and systematic exposition was by John Venn, in The Logic of Chance: An Essay on the Foundations and Province of the Theory of Probability (1866, 1876, 1888).

Etymology

According to the Oxford English Dictionary, the term 'frequentist' was first used[7] by M. G. Kendall in 1949,[8] to contrast with Bayesians, whom he called "non-frequentists" (he cites Harold Jeffreys). He observed:

- 3....we may broadly distinguish two main attitudes. One takes probability as 'a degree of rational belief', or some similar idea...the second defines probability in terms of frequencies of occurrence of events, or by relative proportions in 'populations' or 'collectives'; (p. 101)

- ...

- 12. It might be thought that the differences between the frequentists and the non-frequentists (if I may call them such) are largely due to the differences of the domains which they purport to cover. (p. 104)

- ...

- I assert that this is not so ... The essential distinction between the frequentists and the non-frequentists is, I think, that the former, in an effort to avoid anything savouring of matters of opinion, seek to define probability in terms of the objective properties of a population, real or hypothetical, whereas the latter do not. [emphasis in original]

Alternative views

See Probability interpretations

Notes

- ^ Richard von Mises, Probability, Statistics, and Truth, 1939. p.14

- ^ a b Keynes, John Maynard; A Treatise on Probability (1921), Chapter VIII “The Frequency Theory of Probability”.

- ^ Rhetoric Bk 1 Ch 2; discussed in J. Franklin, The Science of Conjecture: Evidence and Probability Before Pascal (2001), The Johns Hopkins University Press. ISBN 0801865697, p. 110.

- ^ Ellis, Robert Leslie; “On the Foundations of the Theory of Probabilities”, Transactions of the Cambridge Philosophical Society vol 8 (1843).

- ^ Ellis, Robert Leslie; “Remarks on the Fundamental Principles of the Theory of Probabilities”, Transactions of the Cambridge Philosophical Society vol 9 (1854).

- ^ Cournot, Antoine Augustin; Exposition de la théorie des chances et des probabilités (1843).

- ^ Earliest Known Uses of Some of the Words of Probability & Statistics

- ^ Kendall, Maurice George (1949), "On the Reconciliation of Theories of Probability", Biometrika (Biometrika Trust) 36 (1/2): 101–116, JSTOR 2332534

References

- P W Bridgman, The Logic of Modern Physics, 1927

- Alonzo Church, The Concept of a Random Sequence, 1940

- Harald Cramér, Mathematical Methods of Statistics, 1946

- William Feller, An Introduction to Probability Theory and Its Applications, 1957

- M. G. Kendall, On The Reconciliation Of Theories Of Probability, Biometrika 1949 36: 101-116; doi:10.1093/biomet/36.1-2.101

- P Martin-Löf, On the Concept of a Random Sequence, 1966

- Richard von Mises, Probability, Statistics, and Truth, 1939 (German original 1928)

- Jerzy Neyman, First Course in Probability and Statistics, 1950

- Hans Reichenbach, The Theory of Probability, 1949 (German original 1935)

- Bertrand Russell, Human Knowledge, 1948

- John Venn, The Logic of Chance, 1866

External links

- Charles Friedman, The Frequency Interpretation in Probability PS

- John Venn, The Logic of Chance
